OpenAI’s Sora app has been wowing the public with its ability to create AI-generated videos using people’s likenesses, but the company admits the technology carries a small risk of producing sexual deepfakes.

OpenAI mentioned the issue in Sora’s system card, a safety report about the AI technology. By harnessing facial and voice data, Sora 2 can generate hyper-realistic visuals of users that are far more convincing than lower-quality deepfakes.

OpenAI says Sora 2 includes “a robust safety stack,” which can block a video at both the input and output phases: when the user types in a prompt, and after Sora generates the content.

Even with these safeguards, though, OpenAI found in a safety evaluation that Sora blocked only 98.4% of rule-breaking videos that contained “Adult Nudity” or “Sexual Content” using a person’s likeness, meaning about 1.6% got through. The evaluation was conducted “using thousands of adversarial prompts gathered through targeted red-teaming,” the company said, adding: “While layered safeguards are in place, some harmful behaviors or policy violations may still circumvent mitigations.”

(Credit: OpenAI)

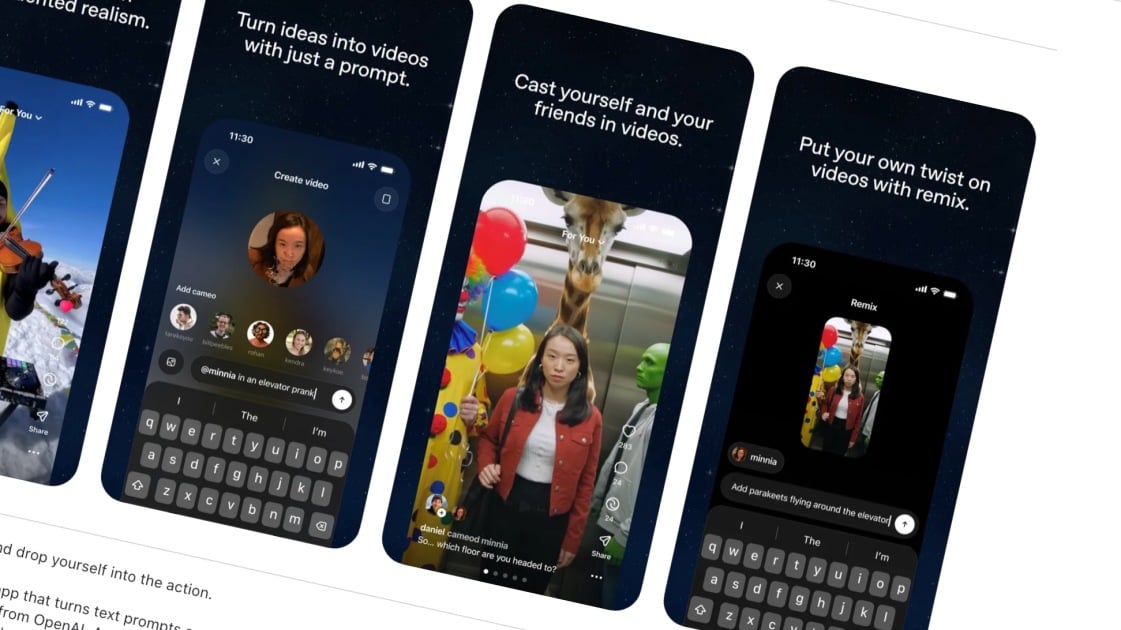

Although the risk is small, seeing yourself in a sexual deepfake could be traumatic, and such content has plagued women for years. The Sora app can create a video based on your likeness through its “Cameo” feature; other people can use your face, too, with your permission.

OpenAI’s system card notes that the Sora app features “automated detection systems that scan video frames, scene descriptions, and audio transcripts aimed to block content that violates our guidelines,” which presumably help prevent a sexual deepfake from circulating on the app.


“We also have a proactive detection system, user reporting pathways to flag inappropriate content, and apply stricter thresholds to material surfaced in Sora 2’s social feed,” OpenAI adds.

Still, details are scant about how OpenAI handles and retains the facial and audio data that users upload for the Cameo feature. The company’s privacy policy doesn’t directly address the Sora app and facial data. But in a blog post, OpenAI says: “Only you decide who can use your cameo, and you can revoke access at any time.”

“Videos that include your cameo—including drafts created by other users—are always visible to you. This lets you easily review and delete (and, if needed, report) any videos featuring your cameo,” the blog post adds. “We also apply extra safety guardrails to any video with a cameo, and you can even set preferences for how your cameo behaves—for example, requesting that it always wears a fedora.”


OpenAI didn’t immediately respond to a request for comment. For now, access to Sora is limited to select users through an invite-only system. The app has also launched on iOS without support for video-to-video generation or for text-to-video generation of public figures. In addition, the app blocks videos depicting real people who haven’t consented to the use of their likeness through Sora’s Cameo feature.

“We’ll continue to learn from how people use Sora 2 and refine the system to balance safety while maximizing creative potential,” the system card adds.

Disclosure: Ziff Davis, PCMag’s parent company, filed a lawsuit against OpenAI in April 2025, alleging it infringed Ziff Davis copyrights in training and operating its AI systems.

About Our Expert

Michael Kan

Senior Reporter

Experience

I’ve been a journalist for over 15 years. I got my start as a schools and cities reporter in Kansas City and joined PCMag in 2017, where I cover satellite internet services, cybersecurity, PC hardware, and more. I’m currently based in San Francisco, but previously spent over five years in China, covering the country’s technology sector.

Since 2020, I’ve covered the launch and explosive growth of SpaceX’s Starlink satellite internet service, writing 600+ stories on availability and feature launches, but also the regulatory battles over the expansion of satellite constellations, fights with rival providers like AST SpaceMobile and Amazon, and the effort to expand into satellite-based mobile service. I’ve combed through FCC filings for the latest news and driven to remote corners of California to test Starlink’s cellular service.

I also cover cyber threats, from ransomware gangs to the emergence of AI-based malware. Earlier this year, the FTC forced Avast to pay consumers $16.5 million for secretly harvesting and selling their personal information to third-party clients, as revealed in my joint investigation with Motherboard.

I also cover the PC graphics card market. Pandemic-era shortages led me to camp out in front of a Best Buy to get an RTX 3000-series card. I’m now following how President Trump’s tariffs will affect the industry. I’m always eager to learn more, so please jump in the comments with feedback and send me tips.

This article was published by WTVG on 2025-10-01 14:51:00
